
Table of Contents

- A New Chapter in Software Creation

- Vibe Coding: A New Developer Experience

- MCP: The Bridge Between AI & Infrastructure

- What AI-Powered Software Delivery Looks Like

- Why It Matters for Organizations

- The Human Shift: How Roles Are Evolving

- Disrupting the Services Model

- Real-World Use Cases

- Why Itential Platform & IAG5 Matter

- From Prompt to Production

A New Chapter in Software Creation

For decades, software delivery followed a familiar pattern: gather requirements, plan sprints, write code, test, and deploy — all through human-driven handoffs. But that playbook is changing fast.

Enter CodeLLMs: large language models (LLMs) fine-tuned for software engineering. These aren't just copilots; they're evolving into autonomous contributors capable of generating logic, writing tests, committing to Git, and registering services. Think of them as on-demand software engineers: scalable, auditable, and always on.

But this isn’t about replacing people. It’s about amplifying them.

Developers shift from coders to curators. Architects evolve into flow designers. Reviewers become policy enforcers. AI writes the first draft. Humans give it meaning.

Vibe Coding: A New Developer Experience

Today's developer experience is less about scripting line by line and more about expressing intent. With the Itential Platform and Itential Automation Gateway (IAG), developers describe outcomes in natural language, and those prompts are translated into versioned, governed automation. It's a shift from syntax to semantics, and from manual scripting to creative orchestration.

This works because the entire stack is built on rails: promptable infrastructure, secure service registration, and GitOps consistency.

The result? Creative, intuitive, and enterprise-ready workflows.

MCP: The Bridge Between AI & Infrastructure

The Model Context Protocol (MCP) is the foundation that lets AI systems take action. It exposes infrastructure operations as structured tools, each with a name, a description, and a defined input schema, that an AI can discover and call safely, turning prompts into controlled, secure execution.

Itential uses MCP to allow AI to:

📁 Read from and commit to Git repos.

🧩 Call services like native CLI or API functions.

⚙️ Register and execute automation through standard interfaces.

The result is a unified, AI-aware infrastructure where Jenkins, Claude, and your other LLMs act through the same protocol, with traceability and governance built in.
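To make the protocol concrete: MCP messages use JSON-RPC 2.0. A server advertises each action as a tool with a `name`, a `description`, and a JSON Schema `inputSchema`, and a client invokes it with a `tools/call` request. The sketch below, using only the Python standard library, builds such a request and dispatches it to a local handler. The tool name `register_service`, its arguments, and the handler are hypothetical illustrations, not Itential's actual MCP tools; a real server would also handle session initialization, transports, and full schema validation.

```python
import json
from typing import Any, Callable

# Hypothetical tool descriptor in the shape MCP uses in tools/list results:
# a name, a human-readable description, and a JSON Schema for the inputs.
REGISTER_SERVICE_TOOL = {
    "name": "register_service",  # hypothetical tool name
    "description": "Register a script from a Git repo as a callable service.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "repo_url": {"type": "string"},
            "path": {"type": "string"},
            "ref": {"type": "string"},
        },
        "required": ["repo_url", "path"],
    },
}


def make_tools_call(request_id: int, name: str, arguments: dict[str, Any]) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request as an MCP client would send it."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle_request(raw: str, tools: dict[str, tuple[dict, Callable[..., str]]]) -> dict:
    """Toy server-side dispatcher: find the tool, check required args, run it."""
    req = json.loads(raw)
    name, args = req["params"]["name"], req["params"]["arguments"]
    if name not in tools:
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    descriptor, handler = tools[name]
    missing = [k for k in descriptor["inputSchema"].get("required", []) if k not in args]
    if missing:
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"Missing arguments: {missing}"}}
    # Tool results carry a list of content items; plain text is the simplest kind.
    text = handler(**args)
    return {"jsonrpc": "2.0", "id": req["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


def register_service(repo_url: str, path: str, ref: str = "main") -> str:
    # Stub: a real handler would call the automation platform here.
    return f"registered {path} from {repo_url}@{ref}"


TOOLS = {"register_service": (REGISTER_SERVICE_TOOL, register_service)}

request = make_tools_call(1, "register_service",
                          {"repo_url": "https://example.com/netops/vlan-cleanup.git",
                           "path": "cleanup.py"})
response = handle_request(request, TOOLS)
print(response["result"]["content"][0]["text"])
```

Keeping the schema next to the handler is what lets the server reject a malformed call before anything touches infrastructure.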

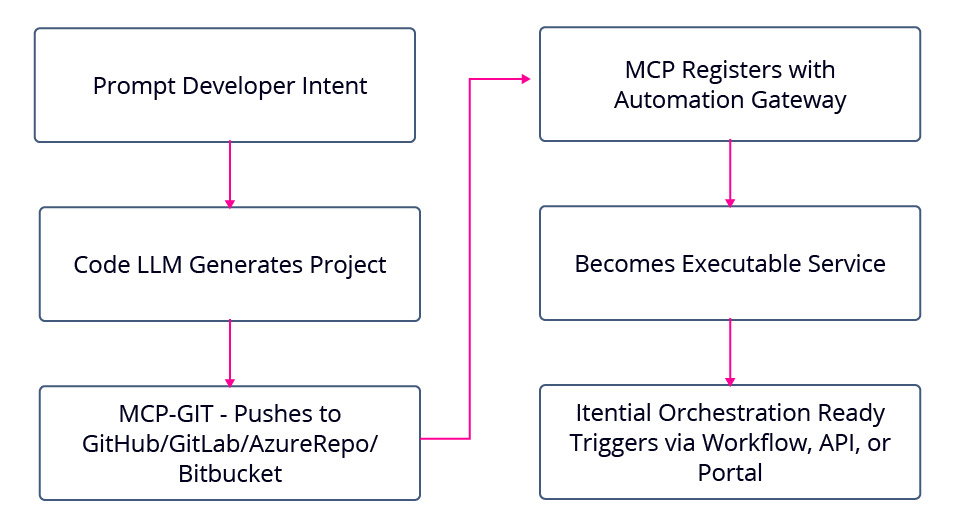

What AI-Powered Software Delivery Looks Like

Imagine this: an engineer prompts an LLM to generate an automation script. The model pushes the script to GitHub via MCP and registers it as a service with IAG5, making it immediately callable from CLI tools, portals, or workflows such as ServiceNow, all within minutes. The human stays in control, validating and approving each step, while the system handles the heavy lifting.
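The flow above can be sketched as a sequence of steps, each gated by an explicit human approval. Every integration function here (`generate_script`, `commit_to_git`, `register_with_gateway`) is a hypothetical stub standing in for the LLM call, the Git push via MCP, and service registration in IAG5; the point is the control structure, in which nothing advances without an approval and every step leaves an audit entry.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PipelineRun:
    prompt: str
    audit: list[str] = field(default_factory=list)  # ordered trail of what happened
    script: str = ""
    commit: str = ""
    service: str = ""


# Hypothetical stubs for the real integrations.
def generate_script(prompt: str) -> str:
    return f"# generated for: {prompt}\nprint('ok')\n"


def commit_to_git(script: str) -> str:
    return "abc1234"  # pretend commit SHA


def register_with_gateway(commit: str) -> str:
    return f"svc-{commit}"


def run_pipeline(prompt: str, approve: Callable[[str, str], bool]) -> PipelineRun:
    """Run generate -> commit -> register, stopping at the first rejected step."""
    run = PipelineRun(prompt)
    run.script = generate_script(prompt)
    run.audit.append("generated")
    if not approve("commit", run.script):
        run.audit.append("rejected at commit")
        return run
    run.commit = commit_to_git(run.script)
    run.audit.append(f"committed {run.commit}")
    if not approve("register", run.commit):
        run.audit.append("rejected at register")
        return run
    run.service = register_with_gateway(run.commit)
    run.audit.append(f"registered {run.service}")
    return run


# A reviewer who approves everything vs. one who blocks registration.
ok = run_pipeline("find unused VLANs", lambda step, item: True)
blocked = run_pipeline("find unused VLANs", lambda step, item: step != "register")
print(ok.audit)       # ['generated', 'committed abc1234', 'registered svc-abc1234']
print(blocked.audit)  # ['generated', 'committed abc1234', 'rejected at register']
```

Passing the approver in as a function keeps the gate pluggable: it could be a person clicking "approve" in a portal or a policy check that runs first.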

Why It Matters for Organizations

Organizations adopting this model gain benefits on several fronts:

- Cost Control: Reduces dependency on services vendors or large teams.

- Speed: Deploy services in under 10 minutes.

- Security: PR-reviewed, scanned, and versioned LLM output.

- Reuse: Logic lives in GitHub — reusable and accessible.

- Knowledge Management: Institutional know-how codified in prompts and repos.

- Shadow IT Control: Everything governed, versioned, and executable from approved platforms.

The Human Shift: How Roles Are Evolving

The shift to AI-generated automation doesn't eliminate jobs; it evolves them. Engineers become prompters and curators. Architects design flows instead of monolithic plans. Ops teams go from executing code to triggering AI-generated services. Security moves earlier, with policy baked into the prompt layer.

It’s not about less work. It’s about smarter work, and more impact.

Disrupting the Services Model

Traditional consulting models — long timelines, high costs, inconsistent quality — can’t keep up with the scale and speed of AI-powered delivery.

With CodeLLMs, organizations can:

⚙️ Generate automation in parallel across domains.

📚 Build and share prompt libraries that scale.

🔁 Enforce quality and compliance through Git + CI/CD.

🧩 Enable vendors to deliver frameworks, not just code.

The result? Faster delivery, lower costs, and a more self-sufficient automation culture.

Real-World Use Cases

🌐 VLAN Cleanup

- An LLM generates a script that identifies unused VLANs across Cisco, Arista, and Juniper devices.

- The script outputs removal commands; the code is committed to the repo and registered as a service.

- Result: 32 VLANs removed across 14 devices.

Saved ~20 hours of manual work

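At its core, a VLAN cleanup script like this computes a set difference: VLANs defined on a device minus VLANs actually in use (on access ports, allowed on trunks, or backing SVIs). The sketch below assumes that inventory has already been collected into plain Python data (a real script would gather it over CLI or API) and emits vendor-style removal commands: `no vlan <id>` for Cisco IOS and Arista EOS, and `delete vlans <name>` for Junos. The device names, VLANs, and data shapes are hypothetical, and generated commands should still go through review before being pushed.

```python
from dataclasses import dataclass

PROTECTED_VLANS = {1}  # default VLAN; never proposed for removal


@dataclass
class Device:
    name: str
    vendor: str              # "cisco", "arista", or "juniper"
    defined: dict[int, str]  # VLAN ID -> VLAN name configured on the device
    in_use: set[int]         # VLAN IDs referenced by ports, trunks, or SVIs


def unused_vlans(device: Device) -> list[int]:
    """VLANs that are configured but not referenced anywhere, sorted by ID."""
    return sorted(set(device.defined) - device.in_use - PROTECTED_VLANS)


def removal_commands(device: Device) -> list[str]:
    """Translate unused VLANs into vendor-specific configuration commands."""
    cmds = []
    for vid in unused_vlans(device):
        if device.vendor in ("cisco", "arista"):
            cmds.append(f"no vlan {vid}")
        elif device.vendor == "juniper":
            # Junos identifies VLANs by name in the configuration hierarchy.
            cmds.append(f"delete vlans {device.defined[vid]}")
        else:
            raise ValueError(f"unsupported vendor: {device.vendor}")
    return cmds


# Hypothetical inventory for three devices.
fleet = [
    Device("core-sw1", "cisco", {1: "default", 10: "users", 20: "voice", 99: "old-lab"}, {1, 10, 20}),
    Device("leaf-2", "arista", {1: "default", 30: "storage", 40: "temp"}, {30}),
    Device("ex-3", "juniper", {1: "default", 50: "guest", 60: "legacy"}, {50}),
]
for dev in fleet:
    print(dev.name, removal_commands(dev))
# core-sw1 ['no vlan 99']
# leaf-2 ['no vlan 40']
# ex-3 ['delete vlans legacy']
```

Separating detection (`unused_vlans`) from rendering (`removal_commands`) lets the same review step show a human exactly which VLANs will go before any vendor syntax is generated.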

🔐 IAM Key Rotation

- An LLM creates a script to rotate AWS IAM access keys older than 90 days.

- The code is pushed to GitHub and registered as a service in IAG5.

- The service is executed via ServiceNow.

Time to value: ~8 minutes

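The selection logic behind a rotation script like this is small. The sketch below works on key metadata in the shape that boto3's `iam.list_access_keys()` returns (`UserName`, `AccessKeyId`, `Status`, `CreateDate`) and produces a dry-run plan instead of calling AWS. The 90-day threshold comes from the use case; the plan format, user names, and key IDs are hypothetical. A live version would carry out each step with boto3's `create_access_key` and `update_access_key`, and would need to hand the new credentials to their consumers before disabling the old key.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(days=90)
MAX_KEYS_PER_USER = 2  # IAM allows at most two access keys per user


def plan_rotation(keys: list[dict], now: datetime) -> list[tuple[str, str, str]]:
    """Return (action, user, key_id) steps for active keys older than MAX_AGE.

    `keys` follows the AccessKeyMetadata shape from IAM's ListAccessKeys.
    """
    by_user = defaultdict(list)
    for k in keys:
        by_user[k["UserName"]].append(k)

    plan = []
    for user, user_keys in sorted(by_user.items()):
        stale = [k for k in user_keys
                 if k["Status"] == "Active" and now - k["CreateDate"] > MAX_AGE]
        for k in stale:
            if len(user_keys) >= MAX_KEYS_PER_USER:
                # No room for a new key; a human decides which key to remove.
                plan.append(("manual_review", user, k["AccessKeyId"]))
                continue
            plan.append(("create_key", user, ""))
            plan.append(("deactivate_key", user, k["AccessKeyId"]))
    return plan


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
keys = [
    {"UserName": "ci-bot", "AccessKeyId": "AKIAOLD1", "Status": "Active",
     "CreateDate": now - timedelta(days=120)},
    {"UserName": "backup", "AccessKeyId": "AKIANEW2", "Status": "Active",
     "CreateDate": now - timedelta(days=10)},
    {"UserName": "etl", "AccessKeyId": "AKIAOLD3", "Status": "Active",
     "CreateDate": now - timedelta(days=200)},
    {"UserName": "etl", "AccessKeyId": "AKIAOLD4", "Status": "Inactive",
     "CreateDate": now - timedelta(days=300)},
]
for step in plan_rotation(keys, now):
    print(step)
# ('create_key', 'ci-bot', '')
# ('deactivate_key', 'ci-bot', 'AKIAOLD1')
# ('manual_review', 'etl', 'AKIAOLD3')
```

Deactivating rather than deleting the old key leaves a rollback path if a consumer was missed.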

Why Itential Platform & IAG5 Matter

The Itential Platform with Automation Gateway plays a crucial role in operationalizing CodeLLM-driven contributions:

- Governed Execution: Runtime policies, memory constraints, version pinning.

- GitOps Native: Services sourced from version-controlled repos.

- Security & Compliance: RBAC, approval gates, DevSecOps pipelines.

- Reusability & Scale: Services discoverable and composable across teams.

- Multi-Domain Automation: Network, cloud, security — all orchestrated together.

- Observability: Track logs, outcomes, usage — audit and improve.

- Visual Orchestration: Drag-and-drop workflows in Design Studio.

- Agent-Aware Workflows: One LLM writes logic, another validates it, another closes the ticket.

Itential turns scattered scripts and manual tasks into intent-driven, AI-compatible workflows — usable by anyone, auditable by everyone, scalable across everything.

Metrics That Matter

- Time to first draft: Hours → minutes

- % of LLM code merged without major rewrite

- Prompt reuse rate across teams

- % of services initiated by LLM

- Cost per script: $2,000 → <$20
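Most of these metrics are simple ratios over delivery records, and the cost line works out to a reduction of more than 99%, since (2,000 − 20) / 2,000 = 0.99 and the new cost is below $20. The sketch below computes three of the ratios from a hypothetical list of records. The field names are assumptions, "merged without major rewrite" is measured against all LLM-initiated drafts, and "prompt reuse rate" is interpreted as the share of prompts used by more than one team.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    prompt_id: str
    team: str
    llm_initiated: bool  # service originated from an LLM-generated draft
    merged: bool         # the PR was merged
    major_rewrite: bool  # reviewers substantially rewrote the LLM output


def pct(part: int, whole: int) -> float:
    """Percentage, returning 0.0 for an empty denominator."""
    return 100.0 * part / whole if whole else 0.0


def delivery_metrics(records: list[Delivery]) -> dict[str, float]:
    llm = [r for r in records if r.llm_initiated]
    clean = [r for r in llm if r.merged and not r.major_rewrite]

    # Which teams have used each prompt?
    teams_per_prompt: dict[str, set[str]] = {}
    for r in records:
        teams_per_prompt.setdefault(r.prompt_id, set()).add(r.team)
    reused = sum(1 for teams in teams_per_prompt.values() if len(teams) > 1)

    return {
        "pct_llm_merged_without_major_rewrite": pct(len(clean), len(llm)),
        "prompt_reuse_rate": pct(reused, len(teams_per_prompt)),
        "pct_services_llm_initiated": pct(len(llm), len(records)),
    }


records = [
    Delivery("vlan-cleanup", "netops", True, True, False),
    Delivery("vlan-cleanup", "dc-team", True, True, True),
    Delivery("iam-rotate", "secops", True, True, False),
    Delivery("manual-fix", "netops", False, True, False),
]
for name, value in delivery_metrics(records).items():
    print(f"{name}: {value:.1f}%")
# pct_llm_merged_without_major_rewrite: 66.7%
# prompt_reuse_rate: 33.3%
# pct_services_llm_initiated: 75.0%
```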

Governance That Scales

Itential brings enterprise guardrails to AI:

- Prompt templates define structure and policy.

- GitHub enforces PR reviews, tests, scans.

- Scoped repo permissions.

- Execution caps, audit logs, and rollback built-in.
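Two of these guardrails fit in a few lines: a prompt template that bakes policy into every request, and an execution wrapper that enforces a per-service cap and writes an audit entry for every attempt, allowed or not. Everything here (the template wording, the field names, the cap value, the in-memory log) is a hypothetical illustration, not Itential's implementation; a production system would persist the log and load policy from configuration.

```python
import time
from string import Template
from typing import Callable, Optional

# Hypothetical policy-bearing template: every prompt carries the same constraints.
PROMPT_TEMPLATE = Template(
    "Task: $task\n"
    "Target platform: $platform\n"
    "Policy: read-only discovery first; no credentials in code; "
    "include unit tests; deliver as a single reviewable pull request."
)


def render_prompt(**fields: str) -> str:
    """Fill the template; Template.substitute raises KeyError if a field is missing."""
    return PROMPT_TEMPLATE.substitute(**fields)


class GovernedExecutor:
    """Runs services under a per-service execution cap and keeps an audit trail."""

    def __init__(self, max_runs: int = 3):
        self.max_runs = max_runs
        self.runs: dict[str, int] = {}
        self.audit_log: list[dict] = []

    def execute(self, service: str, user: str, fn: Callable[[], str]) -> Optional[str]:
        count = self.runs.get(service, 0)
        allowed = count < self.max_runs
        entry = {"ts": time.time(), "service": service, "user": user, "allowed": allowed}
        self.audit_log.append(entry)  # denied attempts are logged too
        if not allowed:
            return None
        self.runs[service] = count + 1
        entry["result"] = fn()
        return entry["result"]


prompt = render_prompt(task="Rotate IAM keys older than 90 days", platform="AWS")
executor = GovernedExecutor(max_runs=2)
results = [executor.execute("iam-rotate", "alice", lambda: "rotated") for _ in range(3)]
print(results)                                    # ['rotated', 'rotated', None]
print([e["allowed"] for e in executor.audit_log])  # [True, True, False]
```

Using `substitute` rather than `safe_substitute` is deliberate: a prompt missing a required field fails loudly instead of reaching the model with a placeholder in it.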

From Prompt to Production

The way we automate is being rewritten — not by scripts, but by AI-generated intent. And that intent needs a secure, compliant path to production.

The Itential Platform, powered by the MCP Server and Automation Gateway, makes that path real. It turns LLM output into governed automation, transforming infrastructure from a bottleneck into a strategic enabler.

This is the future of DeveloperOps: a world where developers orchestrate with prompts, AI executes within policy, and platforms like Itential connect it all together.

AI is ready to act — but in enterprise environments, action needs accountability. The Itential Platform ensures every AI-driven decision is executed with security, compliance, and control.