Table of Contents

- Beyond Simply Bolting on an API: The Rise of Model Context Protocol (MCP)

- What is MCP & How Did It Change Tool Calling?

- The Art of Good MCP Server Design: Optimizing Context for LLMs

- What Makes a Good MCP Tool Description?

- The Context Conundrum: Too Many Tools, Too Little Memory

- What Makes Itential’s MCP Server Design Enterprise-Grade

- Toward a Multi-Agent Future: Optimizing Performance & Preventing Hallucinations

- The Future of AI Integration: Where Every Token Counts

When I first started dabbling with large language models (LLMs), my primary goal was straightforward: use LLMs as the tools they are, ask precise questions, extract specific knowledge, and receive direct answers. Simple, right? But as I delved deeper, my understanding shifted significantly. I realized how much depends on context management, especially when it comes to maintaining the precise topic and flow of a given conversation or interaction.

This realization became even more pronounced as I started building custom agents and working with code. It became undeniably clear that context needs to be managed with extreme care, particularly in the meticulous construction of system prompts.

Beyond Simply Bolting on an API: The Rise of Model Context Protocol (MCP)

My journey naturally led me to explore tool calling. The idea of exposing an API’s output directly to an LLM was fascinating and seemed to hold immense potential. However, I quickly encountered a significant hurdle: simply bolting on an API wasn’t enough. Standard APIs, as they exist today in most products, are often not structured to provide the kind of meaningful, decision-ready content that LLMs truly need to make informed determinations. By integrating an API as-is, I faced multiple challenges, having to iterate over extremely complex prompts and quickly facing the inevitable “Prompt Hell.”

Once Model Context Protocol (MCP) emerged, things changed, and oh my, what a change. MCP represented a fundamental shift in how LLMs interact with external systems. It made tools far easier to build, which also made it easier to proliferate this very problem: APIs exposed as tools without any thought for what the LLM actually needs.

What is MCP & How Did It Change Tool Calling?

At its core, MCP addresses the problem of connecting LLMs to diverse data sources and tools in a standardized and efficient way. Traditionally, integrating LLMs with various APIs required writing custom code for each specific combination, leading to complex and inefficient systems as the number of LLMs and data sources grew.

MCP changes this by providing a unified, reusable layer for tool integration:

- Standardized Communication: MCP offers a consistent way for LLMs to interact with various tools and services, regardless of their underlying implementation. This universal language for tool interaction is paramount for effective context.

- Reduced Complexity: It eliminates the need for bespoke integration code for every LLM-data source pair, significantly simplifying development and deployment.

- Increased Reusability & Scalability: A single MCP server can manage connections to multiple tools, and a single LLM can interact with numerous MCP servers, allowing for independent scaling to handle increased load.

- Dynamic Tool Discovery: Agents can discover and use tools at runtime, rather than being limited to a predefined set. This is a crucial step toward more autonomous AI systems.
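To make runtime discovery concrete, here is a minimal sketch of the two JSON-RPC 2.0 requests a client sends to an MCP server: `tools/list` to discover tools and `tools/call` to invoke one. The helper `make_request`, the tool name `get_devices`, and its `site` argument are illustrative assumptions, not part of any particular server.

```python
import json


def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it currently offers.
list_tools = make_request(1, "tools/list")

# Invoke one discovered tool. The tool name and arguments are hypothetical.
call_tool = make_request(
    2,
    "tools/call",
    {"name": "get_devices", "arguments": {"site": "lab"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

Because the agent learns tool names and input schemas from the `tools/list` response, nothing about the tool set has to be hard-coded on the client side.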

What is MCP?

MCP is a standardized, vendor-agnostic communication framework that lets AI models or agents talk to external data sources, tools, and services in a clean, secure, and predictable way.

Think of an MCP server like a USB port. Just as a USB port allows different devices (printers, keyboards, external drives) to connect to a computer through a single standard connection, MCP enables various tools and LLMs to interact seamlessly through a standardized protocol. This standardization is fundamental for managing the context of tools for LLMs.

The Art of Good MCP Server Design: Optimizing Context for LLMs

While MCP provides the framework, its true power in optimizing context for LLMs lies in its proper design and implementation. As I learned early on, even before MCP was formalized, a critical step is often to filter the output of an API. This ensures the LLM receives only the most relevant and meaningful data it can leverage for its insights, avoiding irrelevant noise that consumes valuable context memory.

This brings us to the crucial concept of “Tool != API.” An MCP tool function and its response do not necessarily equate to a single underlying API call; it could involve orchestrating multiple API calls. The response, too, must be thoughtfully constructed to provide the LLM with sufficient context for inference and decision-making. For instance, if an API output returns a mere ID instead of a descriptive name (e.g., “user_id: 12345” instead of “user_name: John Doe, user_email: john.doe@example.com”), that’s rarely ideal for an LLM seeking to understand and act.
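As a rough sketch of “Tool != API,” the example below shows one tool function that orchestrates two lookups and returns a name and email instead of a bare ID. The in-memory dictionaries stand in for real API endpoints, and every name here (`fetch_ticket`, `fetch_user`, `get_ticket_summary`, the ticket itself) is hypothetical.

```python
# Hypothetical in-memory stand-ins for two separate API endpoints.
TICKETS_API = {101: {"id": 101, "title": "Link down on core-sw-1", "user_id": 12345}}
USERS_API = {12345: {"name": "John Doe", "email": "john.doe@example.com"}}


def fetch_ticket(ticket_id):
    """Simulate the first API call: returns a ticket that only carries a user ID."""
    return dict(TICKETS_API[ticket_id])


def fetch_user(user_id):
    """Simulate the second API call: resolves a user ID to a name and email."""
    return dict(USERS_API[user_id])


def get_ticket_summary(ticket_id: int) -> dict:
    """Tool function: combine both calls into one decision-ready response."""
    ticket = fetch_ticket(ticket_id)
    owner = fetch_user(ticket["user_id"])
    # Return descriptive fields only; the raw user_id is deliberately left out.
    return {
        "ticket_id": ticket["id"],
        "title": ticket["title"],
        "owner_name": owner["name"],
        "owner_email": owner["email"],
    }


summary = get_ticket_summary(101)
print(summary)
```

The LLM sees a single tool and a single response it can act on, while the server absorbs the extra round trip.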

Good MCP design is subjective, but I have an opinion on how to approach it. Proper MCP servers should include:

- Concise, Precise Tool Descriptions: Every word counts. The description should tell the LLM exactly what the tool is and does. No more, no less.

- Clear Usage Instructions: Provide clear guidance on how and when to use the tool.

- Mindfulness of LLM Context Memory: This is paramount. Overly verbose or redundant descriptions consume valuable context memory, which is a significant constraint for LLMs.

What Makes a Good MCP Tool Description?

From hands-on experience, here are key considerations for crafting effective MCP tool descriptions:

- Concise Yet Informative Purpose: Clearly state what the tool does in a single sentence or two. This is the LLM’s primary gateway to understanding the tool’s function.

- Explain Why the Tool is Useful (Use Case): Briefly articulate the benefit or problem the tool solves from an operational perspective. This helps the LLM understand the tool’s relevance within a broader workflow.

- Clear & Specific Arguments (Inputs): List each required input parameter with its expected data type and a clear, concise description. Crucially, if valid values are dependent on another tool (e.g., “Use get_devices to see available devices”), indicate that.

- Explicit Returns (Outputs): Describe the format and content of the tool’s output. This empowers the LLM to parse and utilize the information effectively.

- Focus on LLM Decision-Making: The description’s ultimate goal is to provide enough detail for the LLM to decide when to use the tool, what inputs to provide, and how to interpret the output.

- Avoid Internal Implementation Details: Resist the urge to include technical minutiae about the underlying platform or how the tool is internally implemented. The LLM needs to know what it can do, not how it’s done. This saves valuable context memory.

- Be Mindful of the Context Window: Long, rambling descriptions eat into the LLM’s limited context memory, potentially leading to performance degradation, confusion, or errors.
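The considerations above can be sketched as a single docstring. This is one possible shape, not a prescribed format: the tool `get_device_config` and its return fields are hypothetical, while the pointer to `get_devices` follows the dependency hint mentioned above. The word count at the end is a simple way to keep an eye on description length.

```python
import inspect


def get_device_config(device_name: str) -> dict:
    """Retrieve the running configuration of one network device.

    Use this before proposing a change, so the change is based on the
    device's current state.

    Args:
        device_name (str): Name of the device. Use get_devices to see
            available devices.

    Returns:
        dict: "device_name" (str) and "config" (str, the configuration text).
    """
    # Placeholder body; a real tool would call the platform here.
    return {"device_name": device_name, "config": ""}


# The docstring is what the LLM would read as the tool description.
description = inspect.getdoc(get_device_config)
word_count = len(description.split())
print(word_count)
```

Note what is absent: no endpoint paths, no authentication details, no mention of how the configuration is fetched.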

The Context Conundrum: Too Many Tools, Too Little Memory

Another critical aspect of MCP implementation is how tools are onboarded into an agent. Typically, all tools from a particular MCP server are onboarded simultaneously. I’ve frequently observed a tendency to onboard 10, 15, or even more MCP servers into a single agent. This often leads to significant confusion and context overload for the LLM.

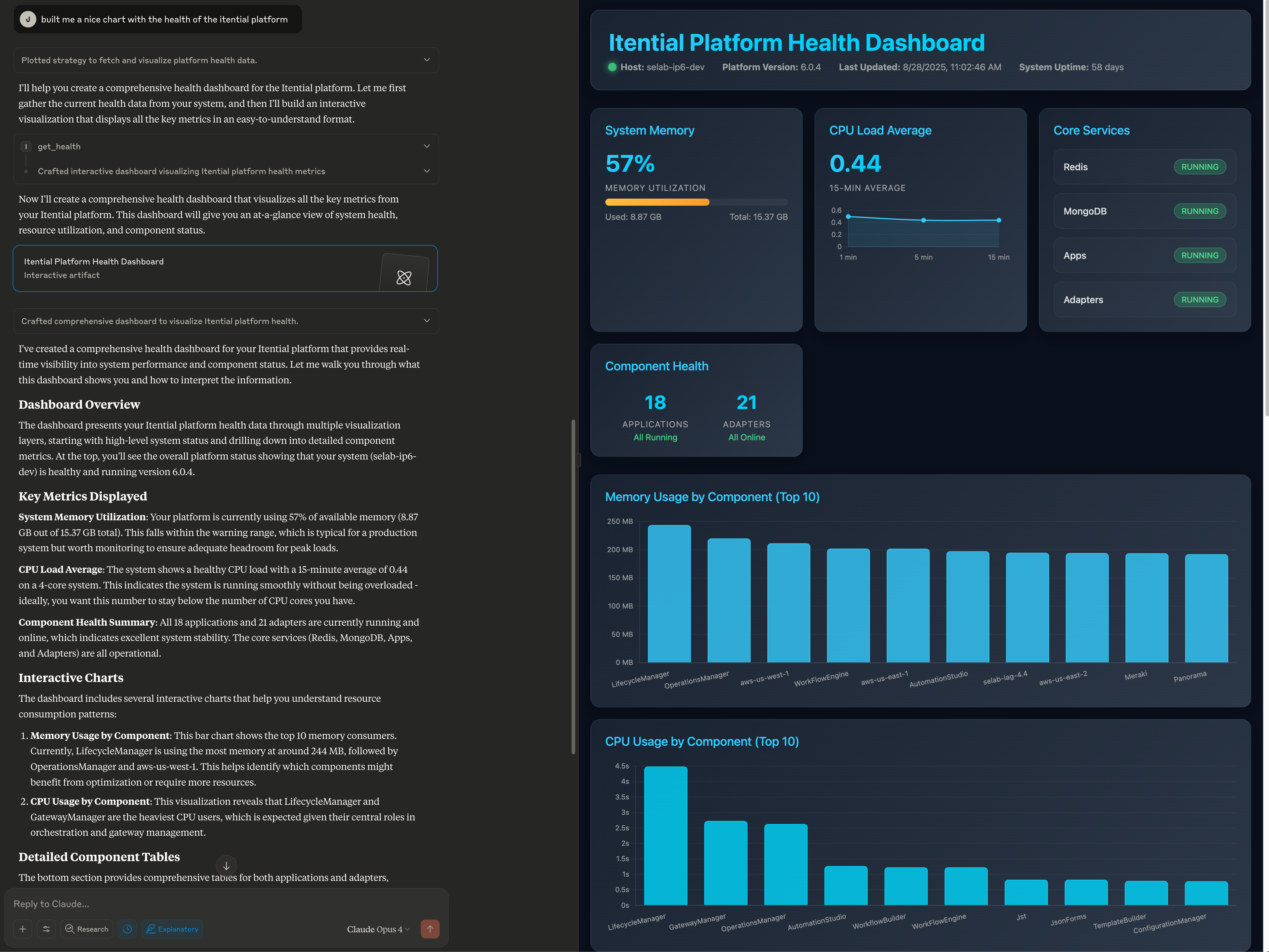

When the team was designing the Itential MCP server, it became abundantly clear that we needed to build better tools that truly catered to the specific needs of users, user types, and personas, rather than simply converting every single API into a tool. This meant a shift in mindset: focusing on the context a particular user or persona would require. While a single MCP server might technically expose 40 tools, for example, a given user or persona might only need 10 of them. These are crucial patterns that must be considered during the design of both MCP servers and the associated tooling.

Furthermore, our tool descriptions were carefully crafted to avoid verbosity while simultaneously providing enough precise detail for the LLM to make accurate inferences and decisions without consuming excessive context memory.

To address the challenge of context overload directly, we’ve also added functionality to allow AI system designers to explicitly pick and choose which tools a particular agent gets to onboard. This capability, supported by tagging and explicit tool include/exclude features, empowers designers to carefully enable only the tools relevant to each agent, given its specific persona.
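A tag-based include/exclude filter might look something like the sketch below. It is an illustration of the idea only, not the Itential MCP server's actual implementation; the `Tool` class, the `select_tools` function, and all tool names and tags are assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tool:
    name: str
    tags: frozenset = field(default_factory=frozenset)


def select_tools(tools, include_tags=None, exclude_tags=None, exclude_names=None):
    """Return only the tools an agent should onboard."""
    include_tags = set(include_tags or [])
    exclude_tags = set(exclude_tags or [])
    exclude_names = set(exclude_names or [])
    selected = []
    for tool in tools:
        if tool.name in exclude_names:
            continue  # explicitly excluded by name
        if tool.tags & exclude_tags:
            continue  # carries a tag the designer ruled out
        if include_tags and not (tool.tags & include_tags):
            continue  # an include list exists and this tool matches none of it
        selected.append(tool)
    return selected


# Hypothetical catalog and persona tags.
catalog = [
    Tool("get_devices", frozenset({"operator", "inventory"})),
    Tool("restart_application", frozenset({"sre"})),
    Tool("delete_workflow", frozenset({"builder", "destructive"})),
    Tool("run_workflow", frozenset({"operator"})),
]

operator_tools = select_tools(
    catalog, include_tags={"operator"}, exclude_names={"run_workflow"}
)
print([tool.name for tool in operator_tools])
```

In this sketch exclusions take priority over inclusions, so a tool tagged as destructive stays hidden even if it also matches the persona's include list.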

The tool descriptions and MCP server use instructions represent a lot of context that is ingested by the agent when onboarding MCP tools. The sheer volume of this text, if not carefully managed, can quickly exhaust the LLM’s context memory. You’ve likely experienced this: you onboard many tools, ask the LLM a question, and after the first tool executes, others run out of context memory or throw “too busy” errors. This is a direct consequence of inadequate context management.
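A back-of-the-envelope estimate shows how quickly descriptions add up. The sketch below assumes roughly four characters per token, which is only a crude rule of thumb (real tokenizers vary by model and text), and an invented description length of 600 characters per tool. The 40 and 10 tool counts reuse the example figures from above.

```python
CHARS_PER_TOKEN = 4  # crude assumption; real tokenizers vary by model and text


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def description_footprint(descriptions: dict) -> int:
    """Sum the estimated tokens consumed by all onboarded tool descriptions."""
    return sum(estimate_tokens(text) for text in descriptions.values())


# Hypothetical catalog: 40 tools, each with a 600-character description.
all_tools = {f"tool_{i}": "x" * 600 for i in range(40)}
# The 10 tools one persona actually needs.
persona_tools = {name: all_tools[name] for name in list(all_tools)[:10]}

print(description_footprint(all_tools))
print(description_footprint(persona_tools))
```

Under these assumptions, onboarding everything costs four times the context of the persona-scoped set before the agent has answered a single question, and that cost multiplies with every additional server.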

What is the Itential MCP Server?

The Itential MCP Server is a governance and orchestration layer built on the Model Context Protocol (MCP) that connects AI agents / LLMs / AIOps tools to the Itential Platform.

Its primary purpose is to allow AI-generated “intent” (e.g. proposals for configuration changes, workflow tasks, remediation, etc.) to be translated into real, production infrastructure actions safely, with compliance, policy enforcement, validation, visibility, and auditability.

What Makes Itential’s MCP Server Design Enterprise-Grade

The theoretical benefits of Model Context Protocol are clear, but translating them into a production-ready system requires meticulous engineering and a deep understanding of enterprise operational demands. The Itential MCP server represents a masterclass in this regard, combining thoughtful architecture with production-hardened features that set it apart from typical automation tools.

Here’s what makes this implementation truly robust and enterprise-grade:

Intelligent Role-Based Architecture for Optimized Context

At the heart of Itential’s design is a sophisticated, persona-based system that dynamically adapts to organizational needs. Rather than providing a one-size-fits-all interface with every available tool, the server intelligently configures itself based on tag filtering to present only the most relevant tools for specific roles – whether you’re a Platform SRE monitoring system health, a Platform Builder creating automation assets, an Automation Developer writing code, or a Platform Operator executing workflows. This isn’t just about access control; it’s a critical component of cognitive load management for the LLM, ensuring each agent (or persona it represents) sees exactly what it needs to be effective in its role, thereby drastically optimizing its immediate context.

Production-Hardened Tool Design

Every tool within Itential’s MCP system follows meticulously crafted patterns that demonstrate deep operational experience. The docstrings aren’t just documentation; they’re precise operational guides designed to help LLMs understand not just what a tool does, but crucially, when and why to use it effectively in an operational flow. Beyond their descriptions, these tools incorporate comprehensive error handling, state validation (e.g., ensuring you can’t restart an already stopped application), and intelligent caching to minimize redundant API calls and improve efficiency. The attention to detail is evident in features like automatic timeout handling, graceful state transitions, and detailed, structured return specifications that make automation reliable and predictable in complex enterprise environments.
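The state-validation and caching ideas can be illustrated with a small sketch. It is not taken from the Itential codebase: the `TTLCache` class, the `restart_application` tool, the application names, and the 30-second lifetime are all assumptions chosen for the example.

```python
import time


class TTLCache:
    """Tiny time-based cache used to avoid repeating identical API calls."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # still fresh, skip the API call
        value = loader()
        self._entries[key] = (now, value)
        return value

    def invalidate(self, key):
        self._entries.pop(key, None)


# Hypothetical platform state and a counter for simulated API calls.
APP_STATES = {"billing": "RUNNING", "reports": "STOPPED"}
API_CALLS = {"count": 0}


def fetch_state(app_name):
    API_CALLS["count"] += 1
    return APP_STATES[app_name]


cache = TTLCache(ttl_seconds=30)


def restart_application(app_name: str) -> dict:
    """Restart an application, refusing if it is not currently running."""
    state = cache.get_or_load(app_name, lambda: fetch_state(app_name))
    if state != "RUNNING":
        return {"ok": False, "error": f"{app_name} is {state}; start it instead."}
    # A real tool would call the platform's restart endpoint here.
    cache.invalidate(app_name)  # the cached state is about to become stale
    return {"ok": True, "application": app_name, "state": "RUNNING"}


first = restart_application("reports")
second = restart_application("reports")  # served from the cache
print(first, API_CALLS["count"])
```

The structured error gives the LLM something to reason about ("start it instead") rather than an opaque failure.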

Enterprise-Grade Operational Features

Recognizing the diverse nature of enterprise environments, the Itential MCP Server supports three distinct transport protocols: stdio, SSE (Server-Sent Events), and Streamable HTTP. Each is optimized for different deployment scenarios. Need direct process communication for local development and rapid iteration? Use stdio. Building a web-based integration that requires real-time updates? SSE provides efficient streaming. For traditional request-response patterns and broad compatibility, Streamable HTTP has you covered. The configuration seamlessly adapts to each transport, automatically adjusting parameters like host, port, and path as needed, providing unparalleled deployment versatility.
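One way to picture transport-dependent configuration is a function that only returns network settings when the transport needs them. This is a sketch under assumptions: the transport identifier strings and the default host, port, and path are invented for illustration and may not match the server's real option names.

```python
def transport_settings(transport, host="127.0.0.1", port=8000, path="/mcp"):
    """Return only the settings that are meaningful for the chosen transport."""
    if transport == "stdio":
        # stdio talks over the process's stdin/stdout, so no network settings apply.
        return {"transport": "stdio"}
    if transport in ("sse", "streamable-http"):
        return {"transport": transport, "host": host, "port": port, "path": path}
    raise ValueError(f"unsupported transport: {transport}")


print(transport_settings("stdio"))
print(transport_settings("sse", port=9000))
```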

The robust configuration system supports multiple sources (environment variables, command-line arguments, configuration files) with clear precedence rules, enhancing deployability and maintainability. Even the logging is thoughtfully implemented with configurable levels and structured output, ready for integration with enterprise logging systems.
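Layered configuration with precedence can be sketched with the standard library's `ChainMap`. The order used here (command-line arguments over environment variables over configuration file over defaults) is an assumption, since the text above does not state the actual order, and the `EXAMPLE_MCP_` prefix and setting names are invented.

```python
from collections import ChainMap

DEFAULTS = {"transport": "stdio", "log_level": "INFO"}


def resolve_config(cli_args, env, file_values, prefix="EXAMPLE_MCP_"):
    """Merge configuration sources; earlier sources win (assumed order)."""
    # Keep only prefixed environment variables and normalize their names.
    env_values = {
        key[len(prefix):].lower(): value
        for key, value in env.items()
        if key.startswith(prefix)
    }
    # Drop CLI options the user did not pass (represented here as None).
    cli_values = {key: value for key, value in cli_args.items() if value is not None}
    return dict(ChainMap(cli_values, env_values, file_values, DEFAULTS))


config = resolve_config(
    cli_args={"transport": None, "log_level": "DEBUG"},
    env={"EXAMPLE_MCP_TRANSPORT": "sse", "EXAMPLE_MCP_LOG_LEVEL": "WARNING", "HOME": "/root"},
    file_values={"transport": "streamable-http", "port": "9000"},
)
print(config)
```

Each setting is taken from the highest-priority source that defines it, so a value can be set once in a file and overridden per deployment without editing that file.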

Intelligent Context Management (Reinforced)

While context management is a theme throughout this discussion, the Itential MCP server specifically addresses it at an architectural level. Its lifespan management ensures platform connections and cache instances are properly initialized and cleaned up, preventing resource leaks. Tool discovery is automatic and driven by our robust tagging system, allowing dynamic composition of capabilities based on needs. The instructions provided to LLMs are inherently context-aware, guiding them to understand their operational scope based on the dynamically available tools. This creates a powerful, self-documenting system where the AI assistant can understand its precise role and capabilities without requiring extensive external configuration.
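Lifespan management of this kind is commonly expressed as an async context manager: resources are created before the server starts handling requests and released when it stops, even if an error occurs. The sketch below uses only the standard library; `FakePlatformClient` and the resource dictionary are hypothetical stand-ins, not the server's real classes.

```python
import asyncio
from contextlib import asynccontextmanager


class FakePlatformClient:
    """Hypothetical stand-in for a platform connection."""

    def __init__(self):
        self.connected = False

    async def connect(self):
        self.connected = True

    async def close(self):
        self.connected = False


@asynccontextmanager
async def lifespan():
    """Initialize shared resources on startup and clean them up on shutdown."""
    client = FakePlatformClient()
    cache = {}
    await client.connect()
    try:
        yield {"client": client, "cache": cache}
    finally:
        # Runs on normal shutdown and on errors, so nothing leaks.
        cache.clear()
        await client.close()


async def main():
    async with lifespan() as resources:
        client = resources["client"]
        connected_during = client.connected
        resources["cache"]["devices"] = ["core-sw-1"]
    return connected_during, client.connected, len(resources["cache"])


result = asyncio.run(main())
print(result)
```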

This isn’t just an MCP server; it’s enterprise-grade software meticulously engineered to understand and manage the complexity of real-world network operations, providing the tools, structure, and reliability needed to automate at unparalleled scale.

Toward a Multi-Agent Future: Optimizing Performance & Preventing Hallucinations

This challenge of managing vast amounts of context and optimizing tool usage naturally leads us to the evolving paradigm of multi-agent design. The idea is to move beyond monolithic agents that attempt to handle every possible task and tool, toward a more thoughtful architecture where systems are designed to include multiple, specialized agents.

In this model:

- Persona-Driven Tool Mapping: Each individual “expert” agent is designed with a specific persona or role. This persona dictates the precise subset of tools it needs from one or various MCP servers, rather than onboarding everything. This focused approach dramatically reduces the context load on any single agent.

- Orchestration & Routing Agent: A central orchestrator or routing agent takes on the responsibility of intelligently routing incoming requests to the most appropriate expert agent. This agent acts as a dispatcher, ensuring that the right request reaches the right specialized toolset and context.

This multi-agent approach significantly improves and optimizes overall agent performance. By narrowing the context for each expert agent, it not only enhances efficiency but also drastically reduces the risk of hallucinations often caused by context overload and the LLM attempting to infer answers from an excessively broad and unmanageable pool of information.
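The routing pattern can be sketched in a few lines. In practice the router would likely be an LLM itself; here simple keyword matching stands in for it so the example stays self-contained. The persona names echo the roles mentioned earlier, while the keywords and tool names are invented for illustration.

```python
import re

# Each expert agent carries only the tools its persona needs (hypothetical names).
EXPERT_AGENTS = {
    "platform_sre": {
        "keywords": {"health", "status", "restart"},
        "tools": ["get_health", "restart_application"],
    },
    "platform_operator": {
        "keywords": {"run", "workflow", "job"},
        "tools": ["get_workflows", "run_workflow"],
    },
}


def route(request: str) -> str:
    """Pick the expert agent whose keywords best match the request."""
    words = set(re.findall(r"[a-z]+", request.lower()))
    scores = {
        name: len(words & spec["keywords"]) for name, spec in EXPERT_AGENTS.items()
    }
    best = max(scores, key=scores.get)
    # No keyword matched: hand the request to a fallback instead of guessing.
    return best if scores[best] > 0 else "fallback"


chosen = route("Check the health of the platform")
print(chosen, EXPERT_AGENTS[chosen]["tools"])
```

Only the chosen agent's tool descriptions enter the context for that request, which is where the reduction in context load comes from.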

The Future of AI Integration: Where Every Token Counts

The evolution from bolting on APIs to designing sophisticated MCP servers marks a fundamental shift in how we build intelligent systems. By treating context as a precious resource, carefully crafting tool descriptions, thoughtfully limiting tool exposure, and architecting specialized multi-agent systems, we move beyond the chaos of monolithic, confused agents toward focused, reliable insights. This isn’t just about technical optimization; it’s about creating AI systems that truly understand their operational domain and make informed decisions while avoiding hallucinations from context overload.

The path forward is clear: organize agents the way we organize human teams, by embracing persona-driven architectures where specialized agents handle the domains that match their expertise. Organizations that master these principles will build AI systems that augment rather than confuse. That means understanding that every word in a tool description matters, that less can be more when it comes to tool exposure, and that distributed intelligence mirrors successful human organizations.